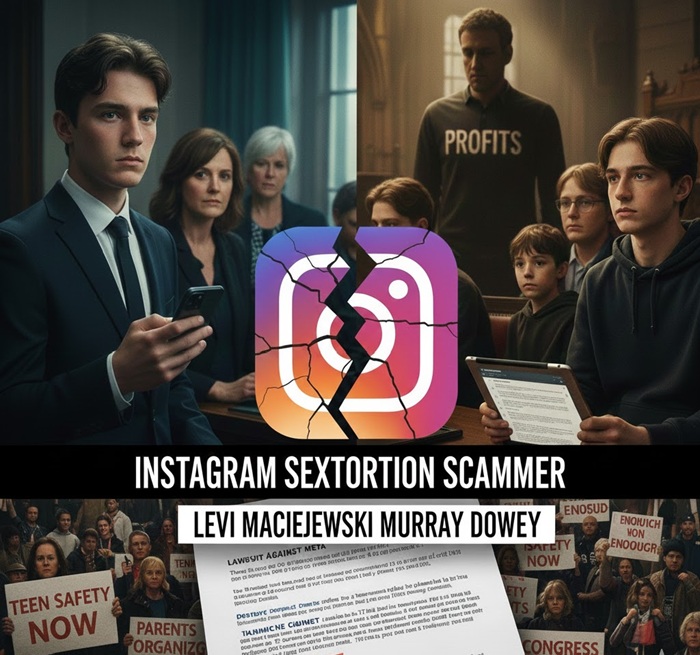

I’m Levi Maciejewski. I’m Murray Dowey. Two teens living an ocean apart, in Pennsylvania and Scotland, but targeted by the same poisonous trap on Instagram.

It started the way these predators always start: a message. A stranger slid into my DMs acting sweet, acting interested, acting like they cared, pretending to be a romantic connection. That wasn’t love. That was bait. And the person behind it was an unknown Instagram sextortion scammer.

They pushed. They flirted. They coaxed. They got what they wanted: intimate photos. And the second they had them, the mask dropped. No romance. No kindness. Just a threat.

“Send more.”

“Pay.”

“Or I’ll expose you to your friends and family.”

That’s not a misunderstanding. That’s not a mistake. That’s extortion. That’s sexual blackmail. That’s sextortion.

And while I was being cornered and crushed by fear, the platform that made this possible, Instagram, kept running like nothing was wrong. Our families say Meta ignored a growing danger that was already harming teens: scammers targeting minors, pressuring them into images, then weaponizing those images to demand money or more content.

Now our families are suing Meta. They’re saying Instagram didn’t just “fail to protect” us—Instagram let the conditions exist for this to happen again and again, for years. The lawsuit argues Instagram is a defective product in this context and claims Meta was negligent by not dealing with sextortion despite knowing it was happening.

Our families’ lawyer, Matthew Bergman, isn’t calling this an accident. He’s saying it was predictable—what happens when design choices prioritize growth and engagement while teen safety gets treated like an afterthought. He says internal company documents show Meta knew about this kind of extortion and still didn’t act fast enough.

Meta responded with a statement saying sextortion is a horrific crime, that they support law enforcement, and that they’re fighting scammers on multiple fronts. They said they’ve taken steps like trying to limit suspicious accounts from following teens, reducing recommendations, blurring potentially sensitive images in DMs, warning teens about risks, and flagging when someone might be in another country. Meta also said that since 2021 teens under 16 were placed into private accounts at sign-up—though the lawsuit disputes that and claims it wasn’t automatic until 2024.

But here’s the reality our families are forcing the world to face: these changes, even if real, came after the damage. The lawsuit argues the platform’s response arrived too late, and that Instagram should be held responsible for what happened to us.

This isn’t just one case. This is a pressure point in society now: parents organizing, Congress investigating child safety issues tied to Meta (including AI chatbot concerns), and public anger rising as families say social media platforms contributed to exploitation or death. Even Meta’s CEO, Mark Zuckerberg, has faced parents at a Senate hearing and publicly apologized for what families have endured.

The lawsuit is the latest attempt to make Instagram answer for what’s happening inside its walls—where teens can be contacted by total strangers and manipulated into a nightmare that feels inescapable.

And now our families say: enough. No more pretending it’s random. No more acting shocked. No more profits first.